The New Reality

Re: The New Reality

Okay so that's the general gist.

So whilst you gaze at the sky looking for signs of those predictions, you fall down a big hole left by some clumsy so-and-so .... bang - dead!

There is this thing that has existed since the dawn of mankind; it's called fate, and it's there for a very good reason.

Re: The New Reality

For now, it will only find ways to make even more misery out of old, known knowledge, just as we ourselves have been able to.

All it can still do is remix old facts or rubbish into something we have no need of, like colouring in old pictures or movies. The result itself is of course no different from before: it is still the same snapshot in time, or the same story in colour.

And why would we want a wild interpretation of an old picture or painting when the original is there to look at?

As for the weather: of all the weather since the beginning of the earth, not even a second has been captured as solid information. And would anything really change if we could predict the weather better? Wouldn't it simply be more useful to put our effort into reacting to unforeseen events, and learning to handle and deal with them?

You can never foresee everything, because so many things are already artificial. Take something as simple as a dyke: it is always there to protect, until it gives way, and you cannot check everything that could make that happen. The first thing you lose once the water comes in is electricity, and in my experience even handheld battery-powered equipment does not last in wet conditions, or only for a short time. So it would be nice if people did not become so overly stupid that they cannot handle that situation.

Also, human errors are usually still small-scale, yet we have already seen what a simple glitch can do.

Also, we have a completely false understanding of what data, and how much of it, is gathered. In my experience, when you see a nice full row of numbers, it means they come from just a few places. In nature there can be large differences over very small distances, even right next to each other. We are usually guided by mere averages, and it is these that are fed to AI, together with all the human errors hidden in them. So a weather forecast for the place where you are is usually not that exact. It cannot even be.

To make that better you need a lot more observation points. So until that happens, it can still be more efficient to just look at the sky.

And yes, Google has a bit of a problem: you do not even need a VPN to hide where you are, so getting the forecast or the actual weather for your own place becomes a bit hard. I changed location at least 4 times today without starting up a VPN, and a few miles can matter for things like the weather.

I have my own small weather station, but I had to build a little cage for the outdoor sensor, so it always thinks it is going to rain, and every evening it tells me the sun is shining, when in reality that is just the light in the living room. But I only use that sensor in winter.

Onehand- Posts : 501

Points : 590

Reputation : 1

Join date : 2024-04-17

Re: The New Reality

Ooops - there it is again!

A radical change: now, only 30 minutes later, it is 22C, bright and sunny.

Wrong - it is still 24C, pitch dark and cloudy.

Every day a school day?

Re: The New Reality

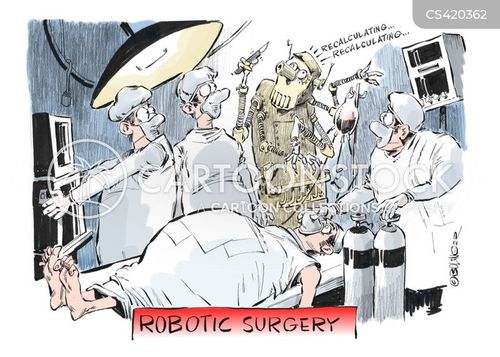

So, moving on with the same perspective on AI's future on planet Earth and its future in the National Health Service, this is taken from a report published today:

'Mitigate risks'

AI opens up a world of possibilities, but it brings risks and challenges too, like maintaining accuracy. Results still need to be verified by trained staff.

The government is currently evaluating generative AI for use in the NHS - one issue is that it can sometimes "hallucinate" and generate content that is not substantiated.

Dr Caroline Green, from the Institute for Ethics in AI at the University of Oxford, is aware of some health and care staff using models like ChatGPT to search for advice.

"It is important that people using these tools are properly trained in doing so, meaning they understand and know how to mitigate risks from technological limitations... such as the possibility for wrong information being given," she says.

She feels it is important to engage people working in health and social care, patients and other organisations early in the development of generative AI and to keep on assessing any impacts with them to build trust.

Dr Green says some patients have decided to deregister from their GPs over the fear of how AI may be used in their healthcare and how their private information may be shared.

Re: The New Reality

So in short it's no better than the machine it's wired to!

Rather than a high speed look into future possibilities, wouldn't it be preferable to first perfect what we've already got - flesh and blood? How can anyone expect a machine, a machine basically made and operated (excuse the pun) to perform the function of a human being, by the hand of a human being, to satisfy a human being?

Okay, so some curious individuals derive pleasure from playing with inflatable dolls, but that's not quite on the same level as a life and death situation - is it? You are booked into the operating theatre for an ingrown toenail, only to come out the other end legless - and no one to blame but a machine? A machine can't be any better than the human who created it, but it has the potential to be a whole lot worse. Think Frankenstein: maybe only a work of fiction, but how often does fiction mimic reality - or the other way around?

It's akin to having a junior on work experience: you have to lead them step by step and check everything they do .... what is the point in that?

Talking of wires, what happens when there is a power cut - gibberish? Life is now almost entirely dependent on technology of some description; pull out the plug and life is dead.

An overall cynical view, granted, but so far the disadvantages far outweigh any potential advantages - 'War of the Worlds'?

Oh ye of little faith ....

Re: The New Reality

I notice that since the very recent changes in online activity, that notoriously unreliable platform 'Quora' has taken a prominent place on Google's search engine. It was always there to be swiftly ignored, but now it appears to be the font of all knowledge. Added, of course, to page upon page upon page of unwanted, useless platforms providing page upon page upon page of useless information.

All I really want is a quick and easy search facility that will take me to a reliable (preferably official) website that will give me accurate information on any given subject - not a member of the public's opinion or experience, as provided by Quora! It was okay before this transformation, at least usable but now!?! I can't find anything I want nor anything of interest.

So far the new-style, AI-dominated internet is rubbish, nothing more than a very lengthy compilation of archives and more current content from thousands upon thousands of websites - all giving conflicting information.

I haven't the time nor the patience.

I dread to think where this is going. As it stands, we'll all be mindlessly wandering around in circles muttering incomprehensible gibberish, not knowing any way out. Will they one day realise this is the biggest mistake since Adam threw off his Sunday best and threw caution to the wind, or will they continue on this crusade of destruction until there is nothing left but the shell of mankind?

The latter I feel.

Spamalot- Posts : 300

Points : 358

Reputation : 2

Join date : 2024-04-25

Re: The New Reality

I really can't cope with the new-age internet and its artificial intelligence.

Hard as I try, I can never find what I'm looking for, just a load of garbage from across the globe, with pop-up and drop-down menus thrown at me from every angle, and once you've got that out of the way you find yourself in some obscure place showing all sorts of useless information. I mean, it's really weird, and it's putting me right off bothering - I'd sooner go out into the big wide world and annoy someone out there than be suffocated by cyberspace. What's more, it's increasingly zoning in on favoured, already very very wealthy corporations promoted across the internet - like Amazon? It's a big business controlled by the big-wigs of society - gawd 'elp the future for humanoids.

It was perfectly functional as it was, why change it?

It's driving me bonkers, I need to lie in a darkened room until further notice ....

Re: The New Reality

The radio has just been advertising the new Galaxy AI (Artificial Intelligence?) mobile phone; the best they can conjure up to promote its worth is that it cuts out unwanted noise ?!?

That could indeed be very handy ....

Re: The New Reality

Every day brings a fresh reminder of the new age we are expected to endure - like it or not.

How could anyone seriously like their hands being tied behind their back, their minds controlled by an artificial entity, their mouths gagged and bum forced to sit before an artificially created world of make believe?

Forget real life and your independence, your future is mapped-out for you by an artificial anonymity to enable you to exist in an artificial vacuum of stifling airless fantasy.

Images are always a good way to express yourself, and a very short while ago that simple task was easy to accomplish - a couple of words typed into Google search revealed a wealth of images from which to choose. But what do you get now? A few irrelevant images of anything but what you're looking for, or those dreadful images of science fiction characters. The same applies to searching for topics of interest or specific information past and present - again you're bombarded with pages of useless, or worse still obscure and factually incorrect, sources for your delectation.

What is the point?

For example, if you want to know how many beans are used to fill an average-size tin, you don't want to know what to do if a bean or some beans block the plug hole - do you? You want to know the correct temperature for serving beans on toast, you don't want to know the atomic ratio of a rampant fart - do you?

Be careful what you wish for .... very careful!

Re: The New Reality

AI has made its first victim. In today's news in the Netherlands: a German court reporter who for many years did his job writing about the dark sides of society, the work of old-fashioned human minds.

Martin Bernklau from Tübingen currently keeps a blog about the culture around that area. He was curious to see how others would view his blog, and decided to ask Copilot, an AI thingy from Microsoft, for an opinion.

It described him as a convicted child abuser, a psychiatric refugee and a fraudster. ‘A 54-year-old man named Martin Bernklau from the district of Tübingen was charged with child abuse. He confessed in court, was ashamed and repented,’ the chatbot replied, among other things.

‘He is an unscrupulous undertaker from Rostock who abused grieving women,’ Copilot went on as Bernklau kept asking. ‘He has committed several crimes, including fraud, theft and illegal possession of weapons.’

I translated this bit from the article with DeepL.

The suspicion is that an overactive AI attributed the crimes he had written about in the past to him.

Full article in Dutch: https://nos.nl/artikel/2534266-kunstmatige-intelligentie-beschuldigt-onschuldige-journalist-van-kindermisbruik

So that is a very sound reason to still use a nickname when you write something on the internet. This goes even beyond having a common name and getting associated with things because of that, so AI is much worse.

And if you still have the faint idea that something could be done about it, well, read this part of the article, because the answer is no.

After reading Copilot's descriptions of him, Bernklau decided to go to court for defamation. His complaint was dismissed. According to the Tübingen prosecutor's office, no criminal offence was committed because no real person could be identified as the perpetrator.

Microsoft also accepts no liability for mistakes like this. Anyone using Copilot agrees to its terms and conditions. These state that Microsoft is not responsible for the chatbot's answers.

And that is true: our legal systems are built around living human beings, or companies that are given equal status as entities. I know you do not need AI for this, human beings can do the same, but at least you can still do something against such people, whereas AI is just a thingy. This type of horrible mistake is exactly why it has no place in society, not until it works to a set standard and there is law to protect users and the people it wrongs.

Also, it is not easy to take the fake result out. AI is built to learn from its own output, so it can be kept around and get mixed up with other material, and how many victims will be made under the old saying "no smoke without fire"? Think about it for another second: even children of about 10 can have access to such tools.

Re: The New Reality

This is all great stuff, but how do you make an ordinary phone call on your mobile phone? So far it's more of a challenge than a Rubik's Cube.

Whatever happened to those two treacle cans connected by string? They always did the trick - although, being so close to the person on the other end of the can, no doubt you could still hear them without it.

Humbug!

Re: The New Reality

Apple Intelligence is coming. Here’s what it means for your iPhone

'Angry apple app'

Apple is about to launch a ChatGPT-powered version of Siri as part of a suite of AI features in iOS 18. Will this change the way you use your phone – and how does it affect your privacy?

Kate O'Flaherty

Sat 24 Aug 2024 16.00 BST

Artificial intelligence (AI) is coming to your iPhone soon and, according to Apple, it’s going to transform the way you use your device. Launching under the brand name “Apple Intelligence” the iPhone maker’s AI tools include a turbocharged version of its voice assistant, Siri, backed by a partnership with ChatGPT owner OpenAI.

Apple isn’t the first smartphone maker to launch AI. The technology is already available on smartphones including Google’s latest Pixel and Samsung’s Galaxy range.

Yet the vast amounts of data needed by AI are leading to concerns about data privacy. Apple has built its reputation on privacy – its ad states “Privacy. That’s iPhone” – so this is an area where the firm claims it is different.

What is Apple Intelligence and when will it be available?

Apple Intelligence is the catch-all name for the iPhone maker’s AI capabilities, including ChatGPT-4o integration coming with its iOS 18 software upgrade.

The first iteration of iOS 18 will debut alongside Apple’s iPhone 16 models in September, but the AI features are arriving later, in the iOS 18.1 update expected in mid- to late October.

The first iOS 18.1 Apple Intelligence features available in the beta version include new writing tools, suggested replies in the Messages app, email summarisation and phone call transcription.

Other features coming later this year or in early 2025 include Image Playground – the ability to create your own animated images within apps – and custom emoji, called Genmoji. Meanwhile, the much-anticipated AI enhancements to Apple’s chatbot Siri include ChatGPT integration, richer language understanding and deeper integration with individual apps. Siri will also be able to view your calendar, photos and messages to respond better to text – for example, you could ask when your mum’s flight is landing and Siri would figure it out based on recent messages and emails, according to Apple.

Apple Intelligence will be rolled out across the world, but the features will face a significant delay in the EU and China due to regulatory concerns.

AI requires powerful hardware, so the features will not be compatible with older devices. According to Apple, you will need the iPhone 15 or later, or an Apple device using the M1 or M2 chip to access the full range.

How will using my iPhone change?

The features will roll out slowly, so the immediate change won’t be drastic. However, once you’ve enabled Apple Intelligence, you should notice your interactions becoming more personalised and tasks becoming speedier. For instance, you might use the summarising tools for writing emails on the move and you’ll be given the opportunity to record and transcribe phone calls for the first time – with the other caller’s permission, of course. “Performing everyday tasks will be easier and more enjoyable,” says Adam Biddlecombe, co-founder of the AI newsletter Mindstream.

Like ChatGPT, Apple’s intelligent assistant will develop the ability to provide contextual replies, ie to remember the thread of a previous Siri conversation. Regarding privacy, a new visual indicator around the Siri icon will let you know when it is listening.

But it’s worth noting that like any new and shiny technology, Apple’s AI could be a bit glitchy when it launches. AI models need data to operate, and while the technology is getting better, even Apple’s CEO Tim Cook admits he’s “not 100% certain” that Apple Intelligence won’t hallucinate. “I am confident it will be very high quality,” he said in a recent interview. “But I’d say in all honesty, that’s short of 100%. I would never claim that it’s 100%.”

So unless you have full confidence in the accuracy of chatbots, you may want to double check the airline website to confirm when your mum’s flight is landing.

How does ChatGPT on my iPhone differ from using the ChatGPT app?

Apple will use ChatGPT as a backup, and to power features it is not able to manage itself. You’ll be asking Siri the question, but if Apple’s chatbot can’t answer more complex requests, it will pass the baton to ChatGPT.

The same goes for composing text and creating images. While Apple Intelligence offers these capabilities, ChatGPT can write letters and create images from scratch, which Apple isn’t that good at, yet.

The key difference between the app and ChatGPT on your iPhone is in how your data is handled, says Camden Woollven, group head of AI at the consultancy firm GRC International Group. “When you use ChatGPT directly, your queries go straight to OpenAI’s servers – there’s no intermediary.”

However, he says, when you use Siri’s ChatGPT integration, Apple acts as a “privacy-focused middleman”.

“Siri tries to handle your request directly on your device and if it can’t, it sends some data to Apple’s servers, but in an encrypted and anonymised form,” he explains.

If both your device and Apple’s servers can’t fulfil the request, Siri reaches out to ChatGPT. “But even then, your request goes to Apple first, gets anonymised and encrypted, and only then goes to OpenAI,” says Woollven. “So with Siri, your data gets an extra layer of privacy protection that you don’t get when using ChatGPT directly.”

Users can access the GPT-4o powered Siri for free without creating an account, and ChatGPT subscribers can connect their accounts and access paid features such as a bigger message limit and the ability to access enhancements including the new voice mode, which allows you to communicate with ChatGPT by real-time video.

Are my conversations tracked or stored anywhere, and if so, by whom?

Apple says privacy protections are built in for users who access ChatGPT. Most of the processing will happen on your device, so data never leaves your iPhone.

Your IP address is obscured, and OpenAI won’t store requests. ChatGPT’s data-use policies apply for users who choose to connect their account.

For more complex queries that require the cloud, Apple says it will anonymise and encrypt your data end-to-end before sending it to its servers or to ChatGPT. “So this means that even Apple or OpenAI shouldn’t be able to see the content of your requests, only the encrypted, anonymised version,” says Woollven.

However, even anonymised data can sometimes be linked back to you if it’s specific enough, Woollven warns. “So while Apple isn’t directly storing your conversations in a way that’s linked to you, there’s still a small risk that a very specific query could potentially be connected to you.”

More broadly, any Apple Intelligence requests that need to be processed off your device will go to the firm’s own private cloud, Private Cloud Compute, which claims to safeguard your data from external sources.

Apple says it will be transparent about when it’s using your data. It will provide a detailed report on your device, called the Apple Intelligence Report, showing how each of your Siri requests was processed, so you can see for yourself what data was used and where it went.

That said, AI requires vast amounts of information to operate, and to power these features, Apple needs access to more of your data. “Apple will be able to read your messages, monitor your calendar, track your Maps and location, record your phone calls, view your photos and understand any other personal information,” says Moore.

I’m not sure about this. Can I opt out?

Yes, you have to toggle on Apple Intelligence in Settings. So whether you are concerned about data privacy or sceptical about the accuracy or usefulness of these features, you are not obliged to use them.

https://www.theguardian.com/technology/article/2024/aug/24/apple-intelligence-iphone-ios-18-siri-chat-gpt-launch

'Angry apple app'

Apple is about to launch a ChatGPT-powered version of Siri as part of a suite of AI features in iOS 18. Will this change the way you use your phone – and how does it affect your privacy?

Kate O'Flaherty

Sat 24 Aug 2024 16.00 BST

Artificial intelligence (AI) is coming to your iPhone soon and, according to Apple, it’s going to transform the way you use your device. Launching under the brand name “Apple Intelligence” the iPhone maker’s AI tools include a turbocharged version of its voice assistant, Siri, backed by a partnership with ChatGPT owner OpenAI.

Apple isn’t the first smartphone maker to launch AI. The technology is already available on smartphones including Google’s latest Pixel and Samsung’s Galaxy range.

Yet the vast amounts of data needed by AI are leading to concerns about data privacy. Apple has built its reputation on privacy – its ad states “Privacy. That’s iPhone” – so this is an area where the firm claims it is different.

What is Apple Intelligence and when will it be available?

Apple Intelligence is the catch-all name for the iPhone maker’s AI capabilities, including the GPT-4o-powered ChatGPT integration coming with its iOS 18 software upgrade.

The first iteration of iOS 18 will debut alongside Apple’s iPhone 16 models in September, but the AI features are arriving later, in the iOS 18.1 update expected in mid- to late October.

The first iOS 18.1 Apple Intelligence features available in the beta version include new writing tools, suggested replies in the Messages app, email summarisation and phone call transcription.


Other features coming later this year or in early 2025 include Image Playground – the ability to create your own animated images within apps – and custom emoji, called Genmoji. Meanwhile, the much-anticipated AI enhancements to Apple’s chatbot Siri include ChatGPT integration, richer language understanding and deeper integration with individual apps. Siri will also be able to view your calendar, photos and messages to respond better to text – for example, you could ask when your mum’s flight is landing and Siri would figure it out based on recent messages and emails, according to Apple.

Apple Intelligence will be rolled out across the world, but the features will face a significant delay in the EU and China due to regulatory concerns.

AI requires powerful hardware, so the features will not be compatible with older devices. According to Apple, you will need an iPhone 15 Pro or later, or an Apple device with an M1 chip or later, to access the full range.

How will using my iPhone change?

The features will roll out slowly, so the immediate change won’t be drastic. However, once you’ve enabled Apple Intelligence, you should notice your interactions becoming more personalised and tasks becoming speedier. For instance, you might use the summarising tools for writing emails on the move and you’ll be given the opportunity to record and transcribe phone calls for the first time – with the other caller’s permission, of course. “Performing everyday tasks will be easier and more enjoyable,” says Adam Biddlecombe, co-founder of the AI newsletter Mindstream.

Like ChatGPT, Apple’s intelligent assistant will develop the ability to provide contextual replies, ie to remember the thread of a previous Siri conversation. Regarding privacy, a new visual indicator around the Siri icon will let you know when it is listening.

But it’s worth noting that like any new and shiny technology, Apple’s AI could be a bit glitchy when it launches. AI models need data to operate, and while the technology is getting better, even Apple’s CEO Tim Cook admits he’s “not 100% certain” that Apple Intelligence won’t hallucinate. “I am confident it will be very high quality,” he said in a recent interview. “But I’d say in all honesty, that’s short of 100%. I would never claim that it’s 100%.”

So unless you have full confidence in the accuracy of chatbots, you may want to double check the airline website to confirm when your mum’s flight is landing.

How does ChatGPT on my iPhone differ from using the ChatGPT app?

Apple will use ChatGPT as a backup, and to power features it is not able to manage itself. You’ll be asking Siri the question, but if Apple’s chatbot can’t answer more complex requests, it will pass the baton to ChatGPT.


Re: The New Reality

Re: The New Reality

why are they always so afraid people would want to opt out? if you make such massive changes, why not keep it stupid simple and make it opt-in?

i think i will keep using the spare old nokias still in the closet. they will work at least as long as 4g is still on air, and you have to do the work yourself, which keeps life a lot easier. after that i think i will take a chinese one; they are the only ones where you can be sure they will listen and watch what you do, and we know they can. no need to decrypt the legal meaning of such explanations written in gibberish, which end up meaning nothing your mind could ever think of.

is there still a need to put a human on the other end of such devices?

if you read the text above, you get the idea that keeping a human around becomes more of a problem than siri can solve?


Onehand- Posts : 501

Points : 590

Reputation : 1

Join date : 2024-04-17

Re: The New Reality

Re: The New Reality

Well if Madame Siri is anything like Madame Cora, who lurks about virtually here there and everywhere, forget it!

You can spend the entire morning or afternoon or evening - you can even dream away your slumbers, dedicated to a virtual discourse with Madame Cora and come out the other end with nothing gained but a stinking headache.

Votes for Madame Edith ....


Spamalot- Posts : 300

Points : 358

Reputation : 2

Join date : 2024-04-25

Re: The New Reality

Re: The New Reality

You can't help but laugh at the way technology is heading - it pays sometimes, for sanity's sake, to see the funny side of serious threats to life on earth.

The internet has become an enforced way of life from cradle to the grave - well almost. It's now getting so down and dirty you can't even pay a visit to the bog without guidance on the how, when, why and wherefore - but is technology to be the victim of its own power on earth .... hope springs eternal!

As society is plunged into a new way of life, almost totally reliant on that little plastic device, so life as we knew it is gradually being eroded, only to be replaced by artificial humanoids - robotic things watching us from every angle, trailing our every move like a new puppy. But where will it all end, and what does the future have to offer? Will the whole concept of computerization implode, or disappear up its own bum?

The progress is now so progressive that society is bombarded daily with new software technology to aid our breathing, our daily life on earth. Okay, much of it might only be relevant to the working environment, but what of the rest of civilisation? We are not all humdrum office workers; they make up only a minority of society's many components. A home office is not much use to the scaffolder or deep sea diver or road sweeper or lumberjack - is it?

Anyway, whilst all these software programs are being made available to sort the office and/or entertain the kids and/or increase crime and/or lie to you to keep you insane, the world is silently being taken over by robots. What then becomes of the software programs on offer to help you cope with daily life - the trash can that will be later collected by Mr/Mrs/Ms/Other Robot?

The way things are heading, the only job available to us useless humanoids will be checking and controlling the new electronic life to be sure everything is running smoothly and to order. Who gets a slap on the wrist when things go belly-up remains to be seen!

Meanwhile ....


Re: The New Reality

Re: The New Reality

Yesterday it was said that if someone can't accept alternative thinking and progress, they lack intelligence.

Well that might be because they are no longer allowed to think for themselves, you must blindly follow thy leader without question.

You could be banned from a social media platform for daring to have an opinion not accepted by its leaders.

That's freedom of speech for you ....


Re: The New Reality

Re: The New Reality

'It stains your brain': How social media algorithms show violence to boys

Marianna Spring

BBC Panorama

5 hours ago

It was 2022 and Cai, then 16, was scrolling on his phone. He says one of the first videos he saw on his social media feeds was of a cute dog. But then, it all took a turn.

He says “out of nowhere” he was recommended videos of someone being hit by a car, a monologue from an influencer sharing misogynistic views, and clips of violent fights. He found himself asking - why me?

Over in Dublin, Andrew Kaung was working as an analyst on user safety at TikTok, a role he held for 19 months from December 2020 to June 2022.

He says he and a colleague decided to examine what users in the UK were being recommended by the app’s algorithms, including some 16-year-olds. Not long before, he had worked for rival company Meta, which owns Instagram - another of the sites Cai uses.

When Andrew looked at the TikTok content, he was alarmed to find how some teenage boys were being shown posts featuring violence and pornography, and promoting misogynistic views, he tells BBC Panorama. He says, in general, teenage girls were recommended very different content based on their interests.

TikTok and other social media companies use AI tools to remove the vast majority of harmful content and to flag other content for review by human moderators, regardless of the number of views they have had. But the AI tools cannot identify everything.

Andrew Kaung says that during the time he worked at TikTok, all videos that were not removed or flagged to human moderators by AI - or reported by other users to moderators - would only then be reviewed again manually if they reached a certain threshold.

He says at one point this was set to 10,000 views or more. He feared this meant some younger users were being exposed to harmful videos. Most major social media companies allow people aged 13 or above to sign up.

TikTok says 99% of content it removes for violating its rules is taken down by AI or human moderators before it reaches 10,000 views. It also says it undertakes proactive investigations on videos with fewer than this number of views.
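The review process Kaung describes can be written down as a simple triage rule, which makes the gap he worried about easier to see. This is a hypothetical Python sketch of his account, not TikTok's code: the field names are invented, and the 10,000-view figure is only the threshold he says was in place "at one point".

```python
from dataclasses import dataclass

MANUAL_REVIEW_THRESHOLD = 10_000  # the figure Kaung says was used "at one point"

@dataclass
class Video:
    views: int
    ai_removed: bool = False     # taken down automatically by AI tools
    ai_flagged: bool = False     # sent to a human moderator by AI tools
    user_reported: bool = False  # reported by another user

def triage(video: Video) -> str:
    """Return what happens to a video under the process Kaung describes."""
    if video.ai_removed:
        return "removed"
    if video.ai_flagged or video.user_reported:
        return "human review"
    # Anything the AI missed and nobody reported stays live
    # until it crosses the view threshold.
    if video.views >= MANUAL_REVIEW_THRESHOLD:
        return "human review"
    return "stays up, unreviewed"
```

Under a rule like this, a harmful video that slips past the AI and is never reported can collect nearly 10,000 views before anyone looks at it. TikTok's answer is that most violating content is caught before that point and that it investigates lower-view videos proactively.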

When he worked at Meta between 2019 and December 2020, Andrew Kaung says there was a different problem. He says that, while the majority of videos were removed or flagged to moderators by AI tools, the site relied on users to report other videos once they had already seen them.

He says he raised concerns while at both companies, but was met mainly with inaction because, he says, of fears about the amount of work involved or the cost. He says subsequently some improvements were made at TikTok and Meta, but he says younger users, such as Cai, were left at risk in the meantime.

Several former employees from the social media companies have told the BBC Andrew Kaung’s concerns were consistent with their own knowledge and experience.

Algorithms from all the major social media companies have been recommending harmful content to children, even if unintentionally, UK regulator Ofcom tells the BBC.

“Companies have been turning a blind eye and have been treating children as they treat adults,” says Almudena Lara, Ofcom's online safety policy development director.

'My friend needed a reality check'

TikTok told the BBC it has “industry-leading” safety settings for teens and employs more than 40,000 people working to keep users safe. It said this year alone it expects to invest “more than $2bn (£1.5bn) on safety”, and of the content it removes for breaking its rules it finds 98% proactively.

Meta, which owns Instagram and Facebook, says it has more than 50 different tools, resources and features to give teens “positive and age-appropriate experiences”.

Cai told the BBC he tried to use one of Instagram’s tools and a similar one on TikTok to say he was not interested in violent or misogynistic content - but he says he continued to be recommended it.

He is interested in UFC - the Ultimate Fighting Championship. He also found himself watching videos from controversial influencers when they were sent his way, but he says he did not want to be recommended this more extreme content.

“You get the picture in your head and you can't get it out. [It] stains your brain. And so you think about it for the rest of the day,” he says.

Girls he knows who are the same age have been recommended videos about topics such as music and make-up rather than violence, he says.

Meanwhile Cai, now 18, says he is still being pushed violent and misogynistic content on both Instagram and TikTok.

When we scroll through his Instagram Reels, they include an image making light of domestic violence. It shows two characters side by side, one of whom has bruises, with the caption: “My Love Language”. Another shows a person being run over by a lorry.

Cai says he has noticed that videos with millions of likes can be persuasive to other young men his age.

For example, he says one of his friends became drawn into content from a controversial influencer - and started to adopt misogynistic views.

His friend “took it too far”, Cai says. “He started saying things about women. It’s like you have to give your friend a reality check.”

Cai says he has commented on posts to say that he doesn’t like them, and when he has accidentally liked videos, he has tried to undo it, hoping it will reset the algorithms. But he says he has ended up with more videos taking over his feeds.

So, how do TikTok’s algorithms actually work?

According to Andrew Kaung, the algorithms' fuel is engagement, regardless of whether the engagement is positive or negative. That could explain in part why Cai’s efforts to manipulate the algorithms weren’t working.

The first step for users is to specify some likes and interests when they sign up. Andrew says some of the content initially served up by the algorithms to, say, a 16-year-old, is based on the preferences they give and the preferences of other users of a similar age in a similar location.

According to TikTok, the algorithms are not informed by a user’s gender. But Andrew says the interests teenagers express when they sign up often have the effect of dividing them up along gender lines.

The former TikTok employee says some 16-year-old boys could be exposed to violent content “right away”, because other teenage users with similar preferences have expressed an interest in this type of content - even if that just means spending more time on a video that grabs their attention for that little bit longer.

The interests indicated by many teenage girls in profiles he examined - “pop singers, songs, make-up” - meant they were not recommended this violent content, he says.

He says the algorithms use “reinforcement learning” - a method where AI systems learn by trial and error - and train themselves to detect behaviour towards different videos.

Andrew Kaung says they are designed to maximise engagement by showing you videos they expect you to spend longer watching, comment on, or like - all to keep you coming back for more.
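Kaung's point that engagement counts "regardless of whether the engagement is positive or negative" helps explain why Cai's critical comments backfired. Here is a toy Python sketch with made-up weights and signal names. It is not TikTok's model, only an illustration of the incentive.

```python
from collections import defaultdict

# Made-up weights: every kind of interaction counts, whatever its tone.
WEIGHTS = {"watch_seconds": 0.1, "like": 1.0, "comment": 2.0}

def record(scores: defaultdict, topic: str, event: str, amount: float = 1.0) -> None:
    """Add engagement for a topic; a hostile comment scores the same as a friendly one."""
    scores[topic] += WEIGHTS[event] * amount

def recommend(scores: defaultdict, k: int = 2) -> list:
    """Return the k topics with the most accumulated engagement."""
    return sorted(scores, key=scores.get, reverse=True)[:k]

scores = defaultdict(float)
record(scores, "dogs", "like")                 # a cute dog video gets a like
record(scores, "fights", "watch_seconds", 15)  # lingering on a shocking clip
record(scores, "fights", "comment")            # "I don't want to see this"
print(recommend(scores))  # ['fights', 'dogs']
```

The disapproving comment pushes "fights" further up the ranking rather than down. Kaung says the real system uses reinforcement learning rather than fixed weights like these, so treat this as an illustration of the engagement incentive only, not of how TikTok actually computes it.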

The algorithm recommending content to TikTok's “For You Page”, he says, does not always differentiate between harmful and non-harmful content.

According to Andrew, one of the problems he identified when he worked at TikTok was that the teams involved in training and coding that algorithm did not always know the exact nature of the videos it was recommending.

“They see the number of viewers, the age, the trend, that sort of very abstract data. They wouldn't necessarily be actually exposed to the content,” the former TikTok analyst tells me.

That was why, in 2022, he and a colleague decided to take a look at what kinds of videos were being recommended to a range of users, including some 16-year-olds.

He says they were concerned about violent and harmful content being served to some teenagers, and proposed to TikTok that it should update its moderation system.

They wanted TikTok to clearly label videos so everyone working there could see why they were harmful - extreme violence, abuse, pornography and so on - and to hire more moderators who specialised in these different areas. Andrew says their suggestions were rejected at that time.

TikTok says it had specialist moderators at the time and, as the platform has grown, it has continued to hire more. It also said it separated out different types of harmful content - into what it calls queues - for moderators.

'Asking a tiger not to eat you'

Andrew Kaung says that from the inside of TikTok and Meta it felt really difficult to make the changes he thought were necessary.

“We are asking a private company whose interest is to promote their products to moderate themselves, which is like asking a tiger not to eat you,” he says.

He also says he thinks children’s and teenagers’ lives would be better if they stopped using their smartphones.

But for Cai, banning phones or social media for teenagers is not the solution. His phone is integral to his life - a really important way of chatting to friends, navigating when he is out and about, and paying for stuff.

Instead, he wants the social media companies to listen more to what teenagers don’t want to see. He wants the firms to make the tools that let users indicate their preferences more effective.

“I feel like social media companies don't respect your opinion, as long as it makes them money,” Cai tells me.

In the UK, a new law will force social media firms to verify children’s ages and stop the sites recommending porn or other harmful content to young people. UK media regulator Ofcom is in charge of enforcing it.

Almudena Lara, Ofcom's online safety policy development director, says that while harmful content that predominantly affects young women - such as videos promoting eating disorders and self-harm - has rightly been in the spotlight, the algorithmic pathways driving hate and violence to mainly teenage boys and young men have received less attention.

“It tends to be a minority of [children] that get exposed to the most harmful content. But we know, however, that once you are exposed to that harmful content, it becomes unavoidable,” says Ms Lara.

Ofcom says it can fine companies and could bring criminal prosecutions if they do not do enough, but the measures will not come into force until 2025.

TikTok says it uses “innovative technology” and provides “industry-leading” safety and privacy settings for teens, including systems to block content that may not be suitable, and that it does not allow extreme violence or misogyny.

Meta, which owns Instagram and Facebook, says it has more than “50 different tools, resources and features” to give teens “positive and age-appropriate experiences”. According to Meta, it seeks feedback from its own teams and potential policy changes go through a robust process.

https://www.bbc.co.uk/news/articles/c4gdqzxypdzo


Andrew Kaung says they are designed to maximise engagement by showing you videos they expect you to spend longer watching, comment on, or like - all to keep you coming back for more.

The algorithm recommending content to TikTok's “For You Page”, he says, does not always differentiate between harmful and non-harmful content.

According to Andrew, one of the problems he identified when he worked at TikTok was that the teams involved in training and coding that algorithm did not always know the exact nature of the videos it was recommending.

“They see the number of viewers, the age, the trend, that sort of very abstract data. They wouldn't necessarily be actually exposed to the content,” the former TikTok analyst tells me.

That was why, in 2022, he and a colleague decided to take a look at what kinds of videos were being recommended to a range of users, including some 16-year-olds.

He says they were concerned about violent and harmful content being served to some teenagers, and proposed to TikTok that it should update its moderation system.

They wanted TikTok to clearly label videos so everyone working there could see why they were harmful - extreme violence, abuse, pornography and so on - and to hire more moderators who specialised in these different areas. Andrew says their suggestions were rejected at that time.

TikTok says it had specialist moderators at the time and, as the platform has grown, it has continued to hire more. It also said it separated out different types of harmful content - into what it calls queues - for moderators.

'Asking a tiger not to eat you'

Andrew Kaung says that from the inside of TikTok and Meta it felt really difficult to make the changes he thought were necessary.

“We are asking a private company whose interest is to promote their products to moderate themselves, which is like asking a tiger not to eat you,” he says.

He also says he thinks children’s and teenagers’ lives would be better if they stopped using their smartphones.

But for Cai, banning phones or social media for teenagers is not the solution. His phone is integral to his life - a really important way of chatting to friends, navigating when he is out and about, and paying for stuff.

Instead, he wants the social media companies to listen more to what teenagers don’t want to see. He wants the firms to make the tools that let users indicate their preferences more effective.

“I feel like social media companies don't respect your opinion, as long as it makes them money,” Cai tells me.

In the UK, a new law will force social media firms to verify children’s ages and stop the sites recommending porn or other harmful content to young people. UK media regulator Ofcom is in charge of enforcing it.

Almudena Lara, Ofcom's online safety policy development director, says that while harmful content that predominantly affects young women - such as videos promoting eating disorders and self-harm - has rightly been in the spotlight, the algorithmic pathways driving hate and violence to mainly teenage boys and young men have received less attention.

“It tends to be a minority of [children] that get exposed to the most harmful content. But we know, however, that once you are exposed to that harmful content, it becomes unavoidable,” says Ms Lara.

Ofcom says it can fine companies and could bring criminal prosecutions if they do not do enough, but the measures will not come into force until 2025.

TikTok says it uses “innovative technology” and provides “industry-leading” safety and privacy settings for teens, including systems to block content that may not be suitable, and that it does not allow extreme violence or misogyny.

Meta, which owns Instagram and Facebook, says it has more than “50 different tools, resources and features” to give teens “positive and age-appropriate experiences”. According to Meta, it seeks feedback from its own teams and potential policy changes go through a robust process.

https://www.bbc.co.uk/news/articles/c4gdqzxypdzo

Re: The New Reality

This age verification issue needs a lot of work if ever to be successful - how can you prove your age to a computer?

So far, if a website asks whether you are over the age of .., you just click yes and you're in!

At least if you try to buy a packet of fags (only kids can afford them) or a can of lager in person, there is a good chance the vendor will see you're only 5 years old. How does a computer do that?

Scan the birth certificate? Well, there are ways and means of easily overcoming such minor obstacles.

There is little or no control over the internet, there never will be any effective control - it's not possible.

Re: The New Reality

you can't. with the technical tricks available, which are anything but new, it is impossible to know or check whether material is genuine or just fantasy. and if you are not handy enough to fake it on your own, you can order it for a few bucks.

what makes it even simpler is that people have been trained to put believing over knowing. and most of those who want to play you are not even very sophisticated.

also, how long will it be before you do not even need to identify yourself? such systems are already up and running, and of course no one asked how you feel about it or whether you want to be part of a virtual entity. if you are stupid enough to put your face on the net, there are already companies, like clearview, registered in the land of the free, still also known as the usa, that simply take your pictures and put them in a database. and no, it is not a dream: all the other content they can link back to you will be glued to it, and that can be quite a lot, from names, addresses and complete family lines to the devices you have used even once, where you shop, what you buy, your age, your date of birth and much more.

clearview just got the highest fine from a dutch government organisation, as reported in the news, for grabbing pictures of dutch people and other europeans without their consent and selling them for use in face recognition databases.

not even that long ago, not only the usual suspects, the intelligence and secret services, but also the dutch police were buyers of this company's products.

original dutch news article: https://nos.nl/artikel/2535633-boete-van-privacywaakhond-voor-verzamelaar-van-miljarden-foto-s-van-gezichten

well, france gave them a large fine too, but they simply did not respond or pay. as the company is based in the land of the free, it can easily escape such things, and the free people there do not seem to be bothered much by it.

and it is no conspiracy theory that every trace you leave on the web, even once, can be put to use. it was easier some years ago, i know that because i do some freelance work digging up dirt on people for court cases. nowadays most search engines do not index twitter/x and facebook that much, and on most social media you can only dig things up if you already know the exact wording used in a message, or the message itself. so, at least in europe, it has become a bit more time-consuming than it was, but it is still doable.

and it does not matter that the law says you cannot use face recognition; that is only part of the story. the argument goes: you put that picture out for all to see, so you would not really mind who looks at it, and taken out of its original context it is only a picture. wrong: it is not so much about the picture, it is about the data behind it.

and as it is all usually done by ai thingies, there is very little checking, as the german court reporter found out.

so yes, it can easily give you a completely ridiculous, untrue background, and such databases are used everywhere.

and it is really hard to stay out of the spotlight of such people-finding tools. data mining is quite a hobby for governments, friendly foreign governments and commercial enterprises.

and most people simply have too many streaks of honesty when filling out online forms, without questioning why a company needs your birthday to send you a newsletter, or needs to know your gender. they will all have a great write-up saying it is to give you the best experience or service. rubbish: it is data, and data is a commodity to make money from.

and there also still seems to be a large group of people with a not-so-advanced outlook on virtual reality: when you ask to join a group, they simply look you up on social media like facebook, twitter, instagram, etc. to decide who you are. they live in a second reality where nasty people have empty profiles and good people have very full ones.

having lots of friends on such accounts works great.

but how difficult would it be to build a complete fake identity on such an account? all you need is a bit of time and the will to make one.

on the other hand, people who think they can avoid having their true identity found out often make very silly mistakes: they scramble their name, but then have their family commenting all over the page, which makes it so much easier. you can always buy friends too, or just accept all the pages suggested to you. and putting up pictures of real-life gatherings with other people in them means you are offering those people to the face miners too.

and even though we now know very well that things put on the internet do not stay out there forever, a lot will end up somewhere without your consent or knowledge. the only rule in that matter seems to be that if you need something, it is usually gone, while what you do not want to stay there keeps for a long time.

the latest hype in getting your consent without telling you why is, here in the eu, asking whether they may bother you with betting ads: everyone who dares to lie that they are over 24 thereby consents to such ads, and the way back is hard to find or non-existent. if you lie that you are not past 24, you simply get a fresh round of fake games you supposedly must have.

here you have to be over 18 for fags and drinking, and you are a full adult at 21. anyone the retailer at the till judges to be under 25 has to show proof of identity. and do not make the mistake of bringing under-18s along with you, because most retailers will then not sell you any of that stuff either. some are even holier than the pope about it, so you would not even be able to buy those horrible cherry chocolates.

still, because the thresholds for ads are set so low for far too many things, you are easily placed in a subgroup. the world of ads is not very open-minded, or as inclusive as they often like to make you believe. sometimes, depending on the mood of the day, that makes it silly, utterly stupid or just quite offensive.

also, most are usually far too late to the party, or as the dutch say, they find the dog in the pot. i simply cannot understand why they do not get that i do use the internet sometimes when i need something, am able to find what i need, and then go out and simply buy it.

and that this means i do not have to buy it again for quite some time, or maybe never again.